Developing conversational apps for Google Assistant draws on Google’s substantial investment in machine learning, speech recognition, and natural language understanding.

The Google Assistant lets you have a conversation with Google that helps you get things done, and thanks to these investments in AI, that conversation can feel natural.

You simply use your voice, ask in a natural way, and the assistant will help you.

What does this mean for a developer?

Well, the Google Assistant platform effectively has three parts.

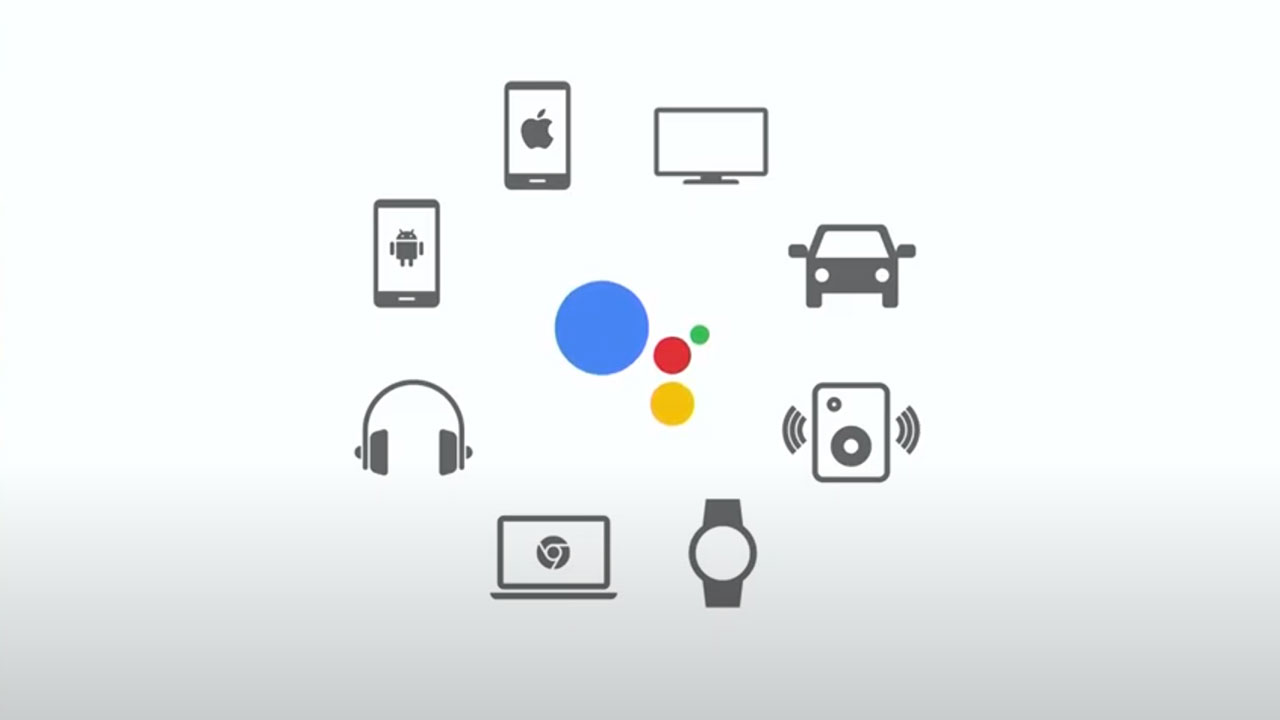

The first part is the device used to interact with the assistant: a mobile phone, an Android Wear watch, a Google Home smart speaker, or one of many other devices.

The second part, the Google Assistant itself, is the conversation between the user and Google. Users can get many things done just by talking to the assistant directly.

The third part, Actions on Google, lets developers extend the assistant by building their own Google Assistant apps.

How does a Google Assistant app work?

Consider, for example, a Personal Chef cooking app that suggests recipes based on what you want.

First, the user needs to invoke your app by saying a phrase like “OK, Google, talk to Personal Chef.”

The assistant then introduces your app.

From this point on, the user is talking to your app.

Your app generates dialogue output, which is spoken to the user.

The user then makes a request.

Your app processes it and replies.

This two-way dialogue continues until the conversation is finished.

There are various ways to build this type of interaction.

One option is to use the Conversation API and the Actions SDK.

Your assistant app receives a request containing the spoken text from the user as a string.

Google handles the speech recognition for you; you parse the string and generate a response, and Google speaks that response back to the user.
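To see why parsing raw strings gets hard, here is a minimal sketch of a hypothetical handler that receives the user’s words as a plain string and tries to pull out a dish name with a regular expression. The function name `extract_dish` and the pattern are illustrative assumptions, not part of any Google API.

```python
import re
from typing import Optional

# Hypothetical rule: assumes users always phrase requests as "... recipe for <dish>".
RECIPE_PATTERN = re.compile(r"recipe for (?P<dish>[\w\s]+)", re.IGNORECASE)


def extract_dish(raw_text: str) -> Optional[str]:
    """Pull a dish name out of the raw transcript, or return None if the rule misses."""
    match = RECIPE_PATTERN.search(raw_text)
    return match.group("dish").strip() if match else None


print(extract_dish("Find me a recipe for homemade cannoli"))  # homemade cannoli
print(extract_dish("How do I make cannoli at home?"))  # None, although the request is the same
```

Every new phrasing needs another hand-written rule, which is exactly the burden that natural language tools remove.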

However, parsing natural language can be really difficult.

Fortunately, Google also provides tools that make this much easier.

Dialogflow is one of these natural language processing tools.

It’s a platform that makes it straightforward to build conversational experiences, and you might not even have to write any code.

What is Dialogflow?

Dialogflow provides an intuitive user interface for creating conversational experiences.

You supply a few example sentences that a user might say.

You can also specify which values you want to extract from what the user says.

Dialogflow then uses machine learning to understand these sentences and manage the conversation.

The key point is that you no longer need to process raw strings yourself; Dialogflow does this for you.

Once the user is talking to your Google Assistant app, the interaction starts with what is called a ‘user says’ phrase, such as “Find me a recipe for homemade cannoli.”

The Google Assistant and Dialogflow then process this phrase and match it to the appropriate intent.

The phrase is processed to extract entities, which are the important pieces of information you’re looking for (here, the dish). The assistant app then calls your webhook with these entities and the action name; the webhook does something with them and generates a response that is spoken back to the user.
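A minimal sketch of the webhook side, assuming the Dialogflow v2 webhook JSON format, where the matched action name and extracted entities arrive under `queryResult.action` and `queryResult.parameters`, and a reply can be returned in `fulfillmentText`. The action name `recipe.find`, the `dish` parameter, and the `suggest_recipe` helper are hypothetical, and the HTTP server that would receive the POST request is left out.

```python
import json
from typing import Any, Dict


def suggest_recipe(dish: str) -> str:
    # Hypothetical stand-in for your own recipe lookup.
    return f"Here is a simple recipe for {dish}."


def handle_webhook(request_body: str) -> Dict[str, Any]:
    """Map a Dialogflow v2 webhook request body to a response dict."""
    request = json.loads(request_body)
    query_result = request.get("queryResult", {})
    action = query_result.get("action", "")          # action name configured on the intent
    parameters = query_result.get("parameters", {})  # entities Dialogflow extracted

    if action == "recipe.find" and parameters.get("dish"):  # "recipe.find" is hypothetical
        reply = suggest_recipe(parameters["dish"])
    else:
        reply = "Sorry, which dish would you like to cook?"
    # fulfillmentText is what gets spoken back to the user.
    return {"fulfillmentText": reply}


sample = json.dumps({
    "queryResult": {
        "queryText": "find me a recipe for homemade cannoli",
        "action": "recipe.find",
        "parameters": {"dish": "homemade cannoli"},
    }
})
print(handle_webhook(sample)["fulfillmentText"])  # Here is a simple recipe for homemade cannoli.
```

Note that the webhook never looks at `queryText`; it works only with the action name and the entities Dialogflow has already extracted.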

We’ve looked at how conversational apps for Google Assistant are built and how Dialogflow handles natural language. But how do you make sure you build Google Assistant apps that users will love?

Google offers many tools and features that will help you create a magical experience for your users.

The most important thing to think about is the design of your app’s voice user interface (VUI).

Google’s developer website has extensive content that can teach you how to think like a designer and build experiences that feel natural and fun to use.

This should be the first place you go when you are designing a new app.

The Google Assistant platform also offers many features that you can use to delight your users.

Your app can support simple audio output on Google Home and other smart speakers as well as on mobile handsets.

On a mobile device, you can also craft special responses that make the most of the screen.

At the most basic level, you can specify on-screen display text that is distinct from the spoken response.
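A minimal sketch of a response whose spoken and displayed text differ, assuming the Dialogflow v2 webhook format with an Actions on Google `payload.google` object containing a `simpleResponse` (`textToSpeech` for speech, `displayText` for the screen). The helper name `simple_response` is hypothetical.

```python
from typing import Any, Dict


def simple_response(speech: str, display: str) -> Dict[str, Any]:
    """Hypothetical helper: build a response whose on-screen text differs from its speech."""
    return {
        "payload": {
            "google": {
                "expectUserResponse": True,  # keep the conversation open for another turn
                "richResponse": {
                    "items": [
                        {
                            "simpleResponse": {
                                "textToSpeech": speech,  # what the assistant says
                                "displayText": display,  # what a screen shows instead
                            }
                        }
                    ]
                },
            }
        }
    }


response = simple_response(
    "I found a cannoli recipe that takes about an hour. Want to hear it?",
    "Homemade cannoli (about 1 hour)",
)
```

On a smart speaker only the speech matters; on a phone the shorter display text keeps the screen uncluttered.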

To guide the user in responding, you can provide suggestion chips: suggested answers to a question that the user can choose with a single tap. Basic cards let you display more substantial on-screen information in the form of images, text, and a hyperlink, and the card’s text doesn’t have to be spoken aloud.
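A sketch of a basic card plus suggestion chips, under the same assumption about the Dialogflow v2 / Actions on Google payload format: a rich response starts with a `simpleResponse`, a `basicCard` follows it, and chips go in a `suggestions` list beside `items`. The helper `card_with_chips` and all example URLs are hypothetical.

```python
from typing import Any, Dict, List


def card_with_chips(speech: str, title: str, body: str, image_url: str,
                    link_url: str, chips: List[str]) -> Dict[str, Any]:
    """Hypothetical helper: spoken intro, a basic card, and tappable suggestion chips."""
    rich_response = {
        "items": [
            # The spoken part comes first; the card is displayed after it.
            {"simpleResponse": {"textToSpeech": speech}},
            {
                "basicCard": {
                    "title": title,
                    "formattedText": body,  # shown on screen, not spoken
                    "image": {"url": image_url, "accessibilityText": title},
                    "buttons": [
                        {"title": "Full recipe", "openUrlAction": {"url": link_url}}
                    ],
                }
            },
        ],
        # Suggestion chips: single-tap answers shown below the response.
        "suggestions": [{"title": chip} for chip in chips],
    }
    return {"payload": {"google": {"expectUserResponse": True,
                                   "richResponse": rich_response}}}


response = card_with_chips(
    "Here's a cannoli recipe.",
    "Homemade cannoli",
    "Crisp shells with a sweet ricotta filling.",
    "https://example.com/cannoli.png",
    "https://example.com/cannoli",
    ["Ingredients", "Start cooking"],
)
```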

You can also use lists and carousels to give users a visual way to choose between options. A carousel shows images, titles, and descriptions for a small number of options. A list can hold more options, but its images are much smaller.
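A sketch of a carousel or list, assuming the Actions on Google option helper in which the webhook returns the system intent `actions.intent.OPTION` with an `OptionValueSpec` containing either `carouselSelect` or `listSelect`. Each option carries a `key` that comes back to your webhook when the user taps it. The helper `selection_response` and the sample options are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def selection_response(prompt: str, options: List[Tuple[str, str, str, str]],
                       as_carousel: bool = True) -> Dict[str, Any]:
    """Hypothetical helper. options: (key, title, description, image_url) per choice."""
    items = [
        {
            "optionInfo": {"key": key},  # identifies the option the user picked
            "title": title,
            "description": description,
            "image": {"url": image_url, "accessibilityText": title},
        }
        for key, title, description, image_url in options
    ]
    # A carousel suits a few large-image options; a list suits more, smaller entries.
    select = ({"carouselSelect": {"items": items}} if as_carousel
              else {"listSelect": {"title": prompt, "items": items}})
    return {
        "payload": {
            "google": {
                "expectUserResponse": True,
                "richResponse": {"items": [{"simpleResponse": {"textToSpeech": prompt}}]},
                "systemIntent": {
                    "intent": "actions.intent.OPTION",
                    "data": {
                        "@type": "type.googleapis.com/google.actions.v2.OptionValueSpec",
                        **select,
                    },
                },
            }
        }
    }


desserts = [
    ("cannoli", "Cannoli", "Crisp shells, ricotta filling", "https://example.com/cannoli.png"),
    ("tiramisu", "Tiramisu", "Coffee-soaked layers", "https://example.com/tiramisu.png"),
]
carousel = selection_response("Which dessert would you like?", desserts)
recipe_list = selection_response("Which dessert would you like?", desserts, as_carousel=False)
```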

You can also shape how your app’s speech sounds: play sound effects; alter the rate, pitch, and volume of speech; control how words and numbers are read aloud; and even layer background music and sounds. You do all of this with SSML (Speech Synthesis Markup Language).
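A small SSML example, built as a Python string so the dynamic parts can be escaped safely. It uses standard SSML elements (`<audio>`, `<prosody>`, `<say-as>`, `<break>`); layering background sound used additional Google-supported elements such as `<par>` and `<media>`, which this sketch leaves out. The function name and the chime URL are hypothetical.

```python
from xml.sax.saxutils import escape, quoteattr


def ready_announcement(dish: str, minutes: int, chime_url: str) -> str:
    """Hypothetical helper: SSML mixing a sound effect, prosody changes, and a pause."""
    return (
        "<speak>"
        # Play a sound effect; the element's text is spoken if the audio can't load.
        f"<audio src={quoteattr(chime_url)}>ding</audio>"
        # Slow down and raise the pitch slightly for the dish name.
        f'<prosody rate="slow" pitch="+2st">{escape(dish)}</prosody> will be ready in '
        # Read the number as a quantity ("twenty"), not digit by digit.
        f'<say-as interpret-as="cardinal">{minutes}</say-as> minutes.'
        '<break time="500ms"/>'
        '<prosody volume="soft">Enjoy your meal!</prosody>'
        "</speak>"
    )


ssml = ready_announcement("Mac & cheese", 20, "https://example.com/chime.ogg")
```

The resulting string would be returned as the spoken text of a response (for example, a `simpleResponse`’s `textToSpeech`). Escaping matters because a dish name such as “Mac & cheese” would otherwise produce invalid markup.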

You might also have a conversation that needs to know the user’s name or location.

For example, to find a local bookstore, your app needs to know the user’s zip code.

You can use the SDK to request permission to access the user’s name, coarse location, and precise location.

When you make this request, the assistant asks the user for permission using your app’s voice.
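A sketch of the bookstore example, assuming the Actions on Google permission helper: the webhook returns the system intent `actions.intent.PERMISSION` with a `PermissionValueSpec` listing permissions such as `NAME`, `DEVICE_COARSE_LOCATION`, or `DEVICE_PRECISE_LOCATION`. Coarse location is assumed to be enough for a zip code, and the assumed location of the granted zip code in the follow-up Dialogflow request (`originalDetectIntentRequest.payload.device.location.zipCode`) should be checked against the current reference. Both helper names are hypothetical.

```python
from typing import Any, Dict, Optional


def ask_for_location(reason: str) -> Dict[str, Any]:
    """Hypothetical helper: ask for coarse location via the permission system intent."""
    return {
        "payload": {
            "google": {
                "expectUserResponse": True,
                "systemIntent": {
                    "intent": "actions.intent.PERMISSION",
                    "data": {
                        "@type": "type.googleapis.com/google.actions.v2.PermissionValueSpec",
                        "optContext": reason,  # spoken before the permission question
                        "permissions": ["DEVICE_COARSE_LOCATION"],
                    },
                },
            }
        }
    }


def zip_code_from_request(request: Dict[str, Any]) -> Optional[str]:
    """Hypothetical helper: read the zip code once the user has granted permission."""
    payload = request.get("originalDetectIntentRequest", {}).get("payload", {})
    return payload.get("device", {}).get("location", {}).get("zipCode")


prompt = ask_for_location("To find bookstores near you")
```

If the user declines, no location arrives and the reader returns `None`, so the app can fall back to asking for a zip code directly.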

If you want to link users to their accounts on your own service and you have an OAuth server, the Google Assistant can prompt users to link their accounts. The requesting user then receives a card at the top of the Google Home app on their phone with a link to your login page. Once the user has completed the account linking flow on your web application, they can invoke your action, and your action can authenticate calls to your services on their behalf.
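A sketch of the webhook side of account linking, under two assumptions: once an account is linked, Google attaches the OAuth access token to each request (assumed here at `originalDetectIntentRequest.payload.user.accessToken`), and an unlinked user can be sent into the flow with the `actions.intent.SIGN_IN` system intent. The helper names and the API URL are hypothetical; the authenticated call is only prepared, not sent.

```python
import urllib.request
from typing import Any, Dict, Optional


def access_token(request: Dict[str, Any]) -> Optional[str]:
    """Hypothetical helper: the OAuth token attached after the account is linked."""
    payload = request.get("originalDetectIntentRequest", {}).get("payload", {})
    return payload.get("user", {}).get("accessToken")


def sign_in_prompt() -> Dict[str, Any]:
    """Hypothetical helper: ask the assistant to start the account-linking flow."""
    return {
        "payload": {
            "google": {
                "expectUserResponse": True,
                "systemIntent": {
                    "intent": "actions.intent.SIGN_IN",
                    "data": {"@type": "type.googleapis.com/google.actions.v2.SignInValueSpec"},
                },
            }
        }
    }


def build_service_call(token: str, url: str) -> urllib.request.Request:
    """Prepare (but do not send) a call to your own API using the bearer token."""
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


linked = {"originalDetectIntentRequest": {"payload": {"user": {"accessToken": "abc123"}}}}
token = access_token(linked)
call = build_service_call(token, "https://api.example.com/orders") if token else None
```

Because the token is issued by your own OAuth server, your API can validate it the same way it validates any other client.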

Note that the OAuth endpoint must be one that you own, rather than a third-party service.

If your experience involves shopping or payments, the platform supports rich transactions that let you accept payments from users, and customers can pay with whatever payment information they already have on file with Google, which makes payments very easy.

Transactions also support a shopping cart, delivery options, an order summary, and payments, and the user can see a history of all their transactions.

The Google Assistant also supports home automation through its smart home integration. Device makers can easily integrate their existing devices with the Home Graph, which knows the state of all connected devices. When you ask to dim the lights a little, it knows how to do that, so users can make all kinds of requests, such as “make it warmer,” “turn off all the lights,” or “how many lights are on?”
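A sketch of a device maker’s fulfillment for one smart home request, assuming the Google smart home protocol in which Google sends an `action.devices.EXECUTE` intent with commands such as `action.devices.commands.BrightnessAbsolute` or `action.devices.commands.OnOff`, and the fulfillment replies with each device’s new state. The in-memory `DEVICES` table and the device ID are hypothetical; a real integration would talk to the maker’s device cloud.

```python
from typing import Any, Dict, List

BRIGHTNESS = "action.devices.commands.BrightnessAbsolute"
ON_OFF = "action.devices.commands.OnOff"

# Hypothetical device state; a real integration would query the device cloud.
DEVICES: Dict[str, Dict[str, Any]] = {
    "kitchen-light": {"online": True, "on": True, "brightness": 80},
}


def handle_execute(request: Dict[str, Any]) -> Dict[str, Any]:
    """Apply EXECUTE commands and report each device's resulting state."""
    results: List[Dict[str, Any]] = []
    for intent_input in request["inputs"]:
        if intent_input["intent"] != "action.devices.EXECUTE":
            continue  # SYNC and QUERY would be handled elsewhere
        for command in intent_input["payload"]["commands"]:
            for device in command["devices"]:
                state = DEVICES.get(device["id"])
                if state is None:
                    results.append({"ids": [device["id"]], "status": "ERROR",
                                    "errorCode": "deviceNotFound"})
                    continue
                for execution in command["execution"]:
                    if execution["command"] == BRIGHTNESS:
                        state["brightness"] = execution["params"]["brightness"]
                    elif execution["command"] == ON_OFF:
                        state["on"] = execution["params"]["on"]
                    # Other commands are ignored in this sketch.
                results.append({"ids": [device["id"]], "status": "SUCCESS",
                                "states": dict(state)})
    return {"requestId": request["requestId"], "payload": {"commands": results}}


dim_request = {
    "requestId": "example-request-1",
    "inputs": [{
        "intent": "action.devices.EXECUTE",
        "payload": {"commands": [{
            "devices": [{"id": "kitchen-light"}],
            "execution": [{"command": BRIGHTNESS, "params": {"brightness": 50}}],
        }]},
    }],
}
result = handle_execute(dim_request)
```

Here the request is written with an absolute brightness of 50; how a phrase like “a little dimmer” becomes a concrete command is decided on Google’s side, not by this code.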

There really are endless possibilities for developing conversational apps for Google Assistant.

Watch the video below as Daniel Imrie-Situnayake from Google Developers India describes the key components of Actions on Google, shows you how to use tools such as Dialogflow to easily build your first app for the Google Assistant, and explores Voice User Interface (VUI) best practices for designing compelling conversational user experiences: